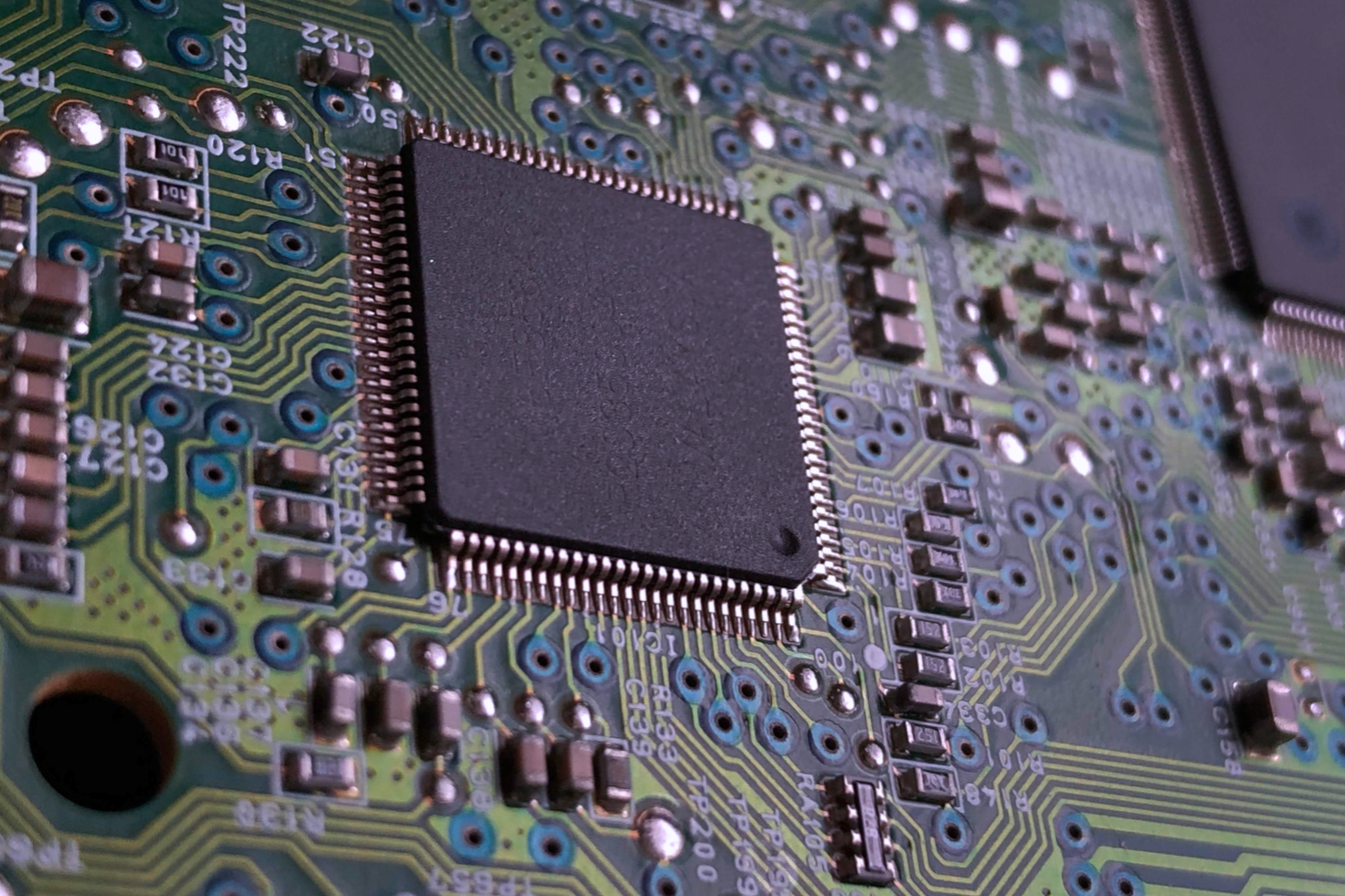

The End of Moore’s Law

Silicon computer chips are nearing the limit of their processing capacity.

But is this necessarily an issue?

Most of us are familiar with Moore’s Law. Gordon Moore, who first observed that computer capacity grew exponentially, was talking about the density of transistors in integrated circuits, but others (among them the famous futurist Ray Kurzweil) later reformulated the ‘law’ to refer to the growth of processing power relative to cost: how many calculations per second a computer chip can perform for every USD 100 it costs. This makes the law robust to changes in technology, extending it backwards to before the invention of the integrated circuit and possibly forwards into a future where new technologies may replace the current silicon-based chip.

Such replacement technologies may soon be needed, as we are near the end of what we can achieve with silicon chips. Performance gains for individual processor cores have slowed considerably since the turn of the millennium. In the 15 years from 1986 to 2001, processor performance increased by an average of 52 percent per year, but by 2018, this had slowed to just 3.5 percent yearly – a virtual standstill by comparison.
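Converting these two growth rates into doubling times makes the contrast easier to see. The short Python sketch below assumes steady compound growth at each quoted rate, which real processor history only roughly follows. The function name `doubling_time` is our own illustration.

```python
import math


def doubling_time(annual_growth: float) -> float:
    """Years needed to double at a constant compound annual growth rate.

    annual_growth is a fraction, e.g. 0.52 for 52 percent per year.
    """
    if annual_growth <= 0:
        raise ValueError("growth rate must be positive")
    return math.log(2) / math.log(1 + annual_growth)


# Single-core growth rates quoted in the text
for period, rate in [("1986-2001", 0.52), ("2018", 0.035)]:
    print(f"{period}: {rate:.1%} per year, doubling every {doubling_time(rate):.1f} years")
```

At 52 percent a year, performance doubles roughly every 1.7 years. At 3.5 percent, a single doubling takes about 20 years.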

There are several reasons for this slowdown. The most important one is that we are nearing the physical limit for how small transistors can be made and how densely they can be packed in integrated circuits. It has also meant the end of Dennard scaling – another computer ‘law’, which states that as transistors become smaller, their power requirements shrink too, keeping the power needed per unit of chip area roughly constant even as transistors are packed more densely. Adding more transistors to a chip now requires both more space and more energy.
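Dennard scaling can be illustrated with the textbook formula for the dynamic (switching) power of transistors, P = C·V²·f. Here C is capacitance, V is supply voltage and f is clock frequency. The sketch below assumes idealised constant-field scaling. Under that assumption, shrinking linear dimensions by a factor k divides capacitance and voltage by k, multiplies frequency by k, and divides the area per transistor by k². Power per unit area then stays constant.

If the voltage can no longer be lowered, which is the reason commonly given for the breakdown of Dennard scaling, power density instead grows with each shrink. Leakage current and other real-world effects are ignored, and `power_density_ratio` is a hypothetical helper.

```python
def power_density_ratio(k: float, voltage_scales: bool = True,
                        frequency_scales: bool = True) -> float:
    """Power per unit chip area after shrinking linear dimensions by a factor k,
    relative to the unshrunk chip.

    Assumes idealised constant-field (Dennard) scaling and dynamic power only:
    P = C * V**2 * f, with capacitance ~ 1/k, voltage ~ 1/k, frequency ~ k
    and area per transistor ~ 1/k**2. Leakage is ignored.
    """
    capacitance = 1 / k
    voltage = 1 / k if voltage_scales else 1.0
    frequency = k if frequency_scales else 1.0
    power_per_transistor = capacitance * voltage ** 2 * frequency
    area_per_transistor = 1 / k ** 2
    return power_per_transistor / area_per_transistor


k = 1.4  # assumed shrink factor, roughly one traditional process generation
print(f"Dennard scaling holds: x{power_density_ratio(k):.2f} power density")
print(f"voltage stuck:         x{power_density_ratio(k, voltage_scales=False):.2f} power density")
```

With k = 1.4, the ideal case leaves power density unchanged. Holding the voltage fixed nearly doubles it (a factor of 1.96), which is the heat problem the paragraph above describes.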

Alternatively, we can increase the clock speed of our computers, the pace at which transistors perform calculations. Above a certain pace, however, energy needs, and hence heat output, rise rapidly with every further increase in clock speed, which in practice limits this method of increasing processing power. This is why CPU clock speeds have all but stopped growing over the past 15 years, particularly for portable computers that can’t easily be equipped with large fans. Many thin laptops today don’t even have fans, thanks to low-power processors and to solid-state drives (SSDs) replacing hard disks, which greatly reduce their overall energy needs and hence heat output. Increasing their clock speed or packing more cores into their CPU would increase their heat output again, making it very uncomfortable to have a laptop in your lap or hold a smartphone in your hand.
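The rapid rise in heat with clock speed follows from the textbook formula for dynamic power, P = C·V²·f. If the voltage stays fixed, power grows only in proportion to frequency. In practice, however, running transistors faster generally requires a higher supply voltage, and because voltage enters the formula squared, power climbs much faster than clock speed.

The sketch below assumes, as a rough rule of thumb rather than a law, that voltage must rise in proportion to frequency. Under that assumption, power grows with roughly the cube of the clock speed. Leakage power is ignored.

```python
def relative_dynamic_power(freq_ratio: float, voltage_ratio: float = 1.0) -> float:
    """Dynamic power relative to a baseline chip, from P = C * V**2 * f.

    Both arguments are ratios to the baseline; capacitance C is assumed unchanged
    and leakage power is ignored.
    """
    return voltage_ratio ** 2 * freq_ratio


for boost in (1.0, 1.25, 1.5, 2.0):
    fixed_v = relative_dynamic_power(boost)
    # Rule-of-thumb assumption: voltage must rise in step with clock speed.
    scaled_v = relative_dynamic_power(boost, voltage_ratio=boost)
    print(f"clock x{boost:.2f}: power x{fixed_v:.2f} at fixed voltage, "
          f"x{scaled_v:.2f} if voltage rises too")
```

Under that assumption, doubling the clock speed multiplies dynamic power, and hence the heat that must be removed, by about eight.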

The still-impressive advances in computer capacity in the past fifteen years are mainly due to packing more cores into processors. Fifteen years ago, typical personal computers had just one core; now we have dual-core, quad-core and even octo-core CPUs. However, adding more cores is not the same as increasing clock speed. Each core basically runs as a separate processor, so extra cores only help if the computer’s tasks can be split between several cores running in parallel. While a single program may well have several tasks (or threads, in technical terms) running in parallel, threads will often have to wait for results from other threads, reducing efficiency. This loss of efficiency grows with the number of threads running in parallel. As a result, doubling the number of cores, and with it the energy input and heat output, will not double the effective capacity of our computer, except for very specialised tasks like graphics. Graphics processing units (GPUs) do, indeed, run a lot of tasks in parallel, updating different parts of your screen simultaneously – but once you reach the highest resolution you need (HD? 4K? 8K?), you get no further gains from running more tasks in parallel.
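A standard way to quantify these diminishing returns is Amdahl’s law. The article does not name it, but it captures the same idea. If only a fraction p of a program’s work can be spread across n cores, the remainder still runs one step at a time, and the overall speedup is 1 / ((1 - p) + p/n).

The sketch below treats all waiting between threads as part of that serial remainder. It assumes a hypothetical program that is 80 percent parallelisable.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Overall speedup predicted by Amdahl's law.

    parallel_fraction: share of the work that can be split across cores (0 to 1).
    cores: number of cores sharing the parallel part.
    The serial remainder, including time threads spend waiting on each other,
    is assumed not to speed up at all.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


# Hypothetical program: 80 percent of its work can run in parallel
for cores in (1, 2, 4, 8, 16):
    print(f"{cores:2d} cores: speedup x{amdahl_speedup(0.8, cores):.2f}")
```

For p = 0.8, two cores give a speedup of about 1.67, eight cores about 3.33 and sixteen cores 4.0. No number of cores can push the speedup past 1 / (1 - 0.8) = 5.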

Supercomputers have achieved faster growth rates than personal computers. Currently, the top calculating capacity of supercomputers is growing by about 40 percent per year, corresponding to roughly a thousand times the capacity in 20 years. Less than a decade ago, however, this growth rate was 80 percent a year, and it took just 11 years to achieve a thousand times the processing power. The difference lies in how supercomputers are built: by packing a lot of ordinary computer chips together. Top supercomputers today are basically PCs with millions of cores. Once the chips themselves stop improving, you need to pack in ever more of them, and this becomes increasingly difficult to manage, not to mention requiring more energy for running and cooling the supercomputer.
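The supercomputer growth figures can be checked with compound-growth arithmetic. The sketch below assumes a perfectly steady annual growth rate, which real supercomputer rankings only approximate. The helper names are illustrative.

```python
import math


def years_to_factor(annual_growth: float, factor: float) -> float:
    """Years of steady compound growth needed to multiply capacity by `factor`."""
    return math.log(factor) / math.log(1 + annual_growth)


def factor_after(annual_growth: float, years: float) -> float:
    """Capacity multiplier after `years` of steady compound growth."""
    return (1 + annual_growth) ** years


for rate in (0.40, 0.80):
    print(f"{rate:.0%} per year: x1000 in {years_to_factor(rate, 1000):.1f} years")
print(f"40% per year for 20 years: x{factor_after(0.40, 20):.0f}")
```

At 40 percent a year, a thousandfold increase takes about 20.5 years, and 20 years yields roughly 840 times. At 80 percent, it takes just under 12 years. Both results are close to the figures quoted above.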

As we can see from the above, we are reaching the end of what we can achieve with existing chip technology. By the original definition of doubling the transistor density on a chip every two years, Moore’s Law has already been dead for a decade or more. However, there may be other ways to achieve growth in computer performance in the coming decades.

For one thing, we can stack transistors in more layers, which reduces the chip’s footprint. This is already being done in flash memory chips used for storage, where transistors are stacked 128 layers deep or more, etched in one go. Such compact chips suit small devices like smartphones or the growing number of wearable devices, not to mention the myriad small, internet-connected devices powering the Internet of Things. However, dynamic random-access memory (DRAM) is more difficult to layer deeply, since almost every part is powered all the time, leading to energy and heating issues – but solutions to these problems are being investigated.

It may also be possible to use artificial intelligence to design more efficient chip architectures with current chip technology. With three-dimensional chips in particular, AI may be able to make better use of the extra dimension than simply layering flat designs, and machine learning can help find where optimisation is most needed and how to achieve it. With evolutionary algorithms, AI can generate different designs and let them compete against each other in a simulated environment. The best designs then mutate and compete again, generation after generation, until after thousands of generations a superior design emerges that doesn’t perceptibly improve with further mutation. This design may be unlike anything a human mind could devise, but will be demonstrably better than earlier designs in terms of speed, energy efficiency and ease of cooling.
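The evolutionary loop described above can be sketched in a few lines of Python. This is a toy illustration built on several assumptions. A ‘design’ is just a list of five numbers between 0 and 1, and the hypothetical `fitness` function stands in for the chip simulator that, in reality, would score speed, energy efficiency and cooling.

The sketch uses only mutation and survival of the fittest half, with no crossover. It stops when the best score has not improved perceptibly for a set number of generations.

```python
import random

# Hypothetical stand-in for a chip simulator. A real tool would score speed,
# energy use and ease of cooling; this toy simply rewards designs whose five
# parameters lie close to an arbitrary target.
TARGET = [0.2, 0.9, 0.5, 0.7, 0.1]


def fitness(design: list[float]) -> float:
    """Higher is better; 0.0 is a perfect match with TARGET."""
    return -sum((d - t) ** 2 for d, t in zip(design, TARGET))


def mutate(design: list[float], rng: random.Random, scale: float = 0.05) -> list[float]:
    """Nudge every parameter by a small random amount, clamped to [0, 1]."""
    return [min(1.0, max(0.0, d + rng.gauss(0.0, scale))) for d in design]


def evolve(pop_size: int = 40, patience: int = 50, tol: float = 1e-6,
           max_generations: int = 5000, seed: int = 0) -> tuple[list[float], float, int]:
    """Evolve designs until the best one stops improving by more than `tol`
    for `patience` consecutive generations (or `max_generations` is reached).
    Returns (best design, its fitness, generations run)."""
    rng = random.Random(seed)
    population = [[rng.random() for _ in TARGET] for _ in range(pop_size)]
    best_so_far = float("-inf")
    stale = 0
    generation = 0
    for generation in range(1, max_generations + 1):
        # Compete: rank all designs and keep the better half unchanged.
        population.sort(key=fitness, reverse=True)
        survivors = population[: pop_size // 2]
        top = fitness(survivors[0])
        if top > best_so_far + tol:
            best_so_far, stale = top, 0
        else:
            stale += 1
            if stale >= patience:
                break  # no perceptible improvement for `patience` generations
        # Reproduce: each survivor spawns one mutated child.
        population = survivors + [mutate(s, rng) for s in survivors]
    best = max(population, key=fitness)
    return best, fitness(best), generation


design, score, generations = evolve()
print(f"best score {score:.6f} after {generations} generations")
print("design:", [round(x, 3) for x in design])
```

Keeping the surviving half unchanged (so-called elitism) guarantees that the best design found so far is never lost. The `patience` parameter plays the role of ‘doesn’t perceptibly improve with further mutation’.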

Radical new technologies could also increase processing capacity. Superconducting computers can run on very little energy, with correspondingly small heat output, allowing very dense three-dimensional chip architectures. Quantum computers, still in their infancy, can in theory handle certain types of tasks far more quickly than traditional computers (achieving so-called quantum supremacy), solving in seconds some problems that the fastest ordinary computer couldn’t finish in the lifetime of the universe. However, both require cooling to extremely low temperatures, which makes them impractical for desktop computers, not to mention portable devices. Other new technologies are theoretically possible but may be too expensive to be practical.

We could ask whether all the computing power a personal computer or smartphone needs must reside in the device itself. Cloud computing has been common for quite a while, allowing devices to outsource processing and storage to external computers and servers. It is more practical to power and cool a small number of large, centralised computers and servers than every little household or pocket device. And if all their important data are stored in the cloud, users don’t have to worry about losing them when a device is lost or broken.

However, connection speeds are an issue, especially if a lot of data are sent back and forth all the time. After all, a request takes far longer to travel hundreds of kilometres over the internet than to cross a few millimetres inside a CPU – an issue that grows in importance as processing speeds rise. A partial solution is edge computing, where devices use storage and processing power located near the user. Edge computing devices may handle all the processing and data storage, or they could function as gateway devices that process data before they are sent to storage in the cloud. The Internet of Things, in which many devices send large volumes of data to be analysed in real time, will have to rely on edge computing to reduce lag.

5G wireless communication can help by offering greater bandwidth and lower latency than 4G. It may well be that 5G will be used mainly by IoT devices and not so much by personal devices like smartphones and desktop computers, where 4G is likely to remain sufficient for most users, except perhaps extreme gamers. With cloud and edge computing, your devices only need enough processing power to handle the most common everyday tasks, with peaks of processing demand outsourced to external servers. This, of course, means greater reliance on stable connections, and security is always weaker when data leave the building.
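A rough calculation shows why distance matters. The sketch below assumes signals travel at about 200,000 km per second, roughly the speed of light in optical fibre. It counts propagation delay only; real internet requests add routing, queuing and processing time on top.

The distances and the 3 GHz clock are hypothetical examples. On-chip wiring is in practice slower than this idealised speed, but not by nearly enough to close the gap.

```python
FIBRE_SPEED_M_PER_S = 2.0e8  # assumed signal speed in optical fibre (about 2/3 of c)
CLOCK_HZ = 3.0e9             # hypothetical 3 GHz processor


def round_trip_seconds(distance_m: float, speed: float = FIBRE_SPEED_M_PER_S) -> float:
    """Lower bound on round-trip time: propagation delay only, no routing or queuing."""
    return 2 * distance_m / speed


# Hypothetical distances from a device to where its data is processed
paths = [
    ("cloud data centre, 500 km", 500e3),
    ("edge node, 20 km", 20e3),
    ("across a CPU, 5 mm", 5e-3),
]
for label, distance in paths:
    rtt = round_trip_seconds(distance)
    print(f"{label}: {rtt * 1e3:.6f} ms, about {rtt * CLOCK_HZ:,.2f} clock cycles")
```

A 500 km round trip takes at least 5 milliseconds, or about 15 million cycles of a 3 GHz processor. An edge node 20 km away cuts this to 0.2 milliseconds.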

Ultimately, we must ask how much processing power a typical user really needs. Barring the most graphics-heavy computer games, a five-year-old computer today can easily handle most of the things it is required to do, whether at home or at work, and with terabyte capacity, storage space has ceased to be a problem for most users. It is hard to imagine that we will demand much higher screen resolution than what high-end devices offer today. Realistic virtual reality can probably be achieved by personal devices in a decade or so, even with today’s slow advances in processing power; any lag is likely to be the result of poor connections rather than slow processing. Creating artificial intelligence capable of handling most human tasks (that we feel comfortable having AI handle) is probably more a question of design than of processing power. In the end, it may be that our personal smart devices will soon stop getting faster – not because we can’t make them faster, but because we don’t need them to be.

This article was featured in Issue 63 of our previous publication, Scenario Magazine.