When Models Mislead

Climate economists are playing dice with our future. Some are now sounding the alarm that the models they rely on are fundamentally flawed.

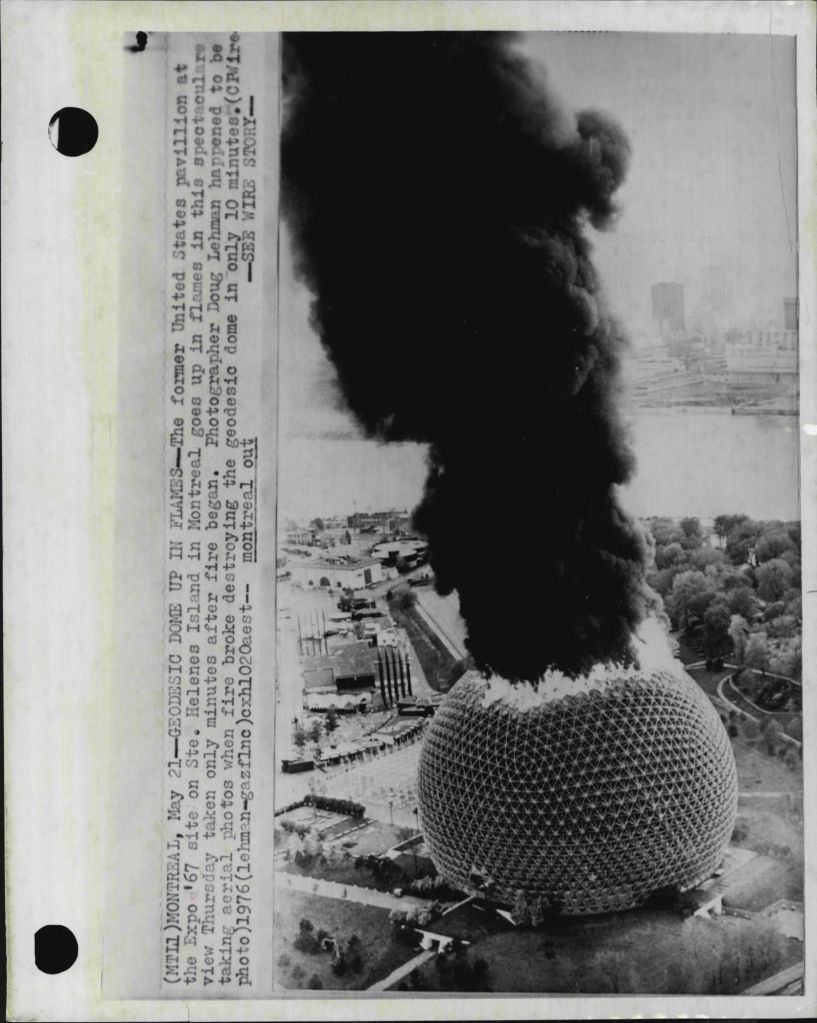

Image: 1976 fire at Biosphere 1 in Montreal, designed by Buckminster Fuller for the Expo 67. Credit: Doug Lehman.

Humans love numbers – especially when they’re gathered as data, and especially when that data promises to predict observable reality. Today, we have more data available to us than ever before, and in turn increasingly better and more complex models of social, economic and environmental change. The temptation here is to say that our understanding of the world is increasing exponentially.

Exponentiality is a commonly encountered concept within the field of economic theory – but only relatively recently. The earliest classical economists – Adam Smith, for example, or John Stuart Mill – believed to some extent that growth is finite. Mill in particular wrote that once the upper limit of finite resources, such as land, had been reached, humanity may have ‘room for improving the art of living’.

The idea of infinite economic growth first became mainstream after the widespread adoption of the GDP model following the Second World War. As international competition increased, economists like Robert Solow developed new economic models that emphasised labour, capital, and increases in productivity driven by technological progress rather than the finitude of natural resources. Solow eventually received the Nobel Prize in economics for his work on the ‘Solow-Swan model’, concerning the intersections of technology, productivity and growth.

When the Club of Rome released the 1972 report The Limits to Growth, which predicted societal collapse as an outcome of exponential growth on a planet with finite resources, Solow and others like him dismissed it thoroughly. Solow even once told a US Congressional committee that the real world is so complex that prediction more than twenty years into the future is foolhardy. He argued that climate activists ought to focus on pollution rather than growth, believing that stagnant societies could still generate waste and therefore bring about ecological collapse. Moreover, in his view, models tasked with determining a ceiling to economic growth should assume a feedback loop in which increased GDP and productivity bring with them new technology that can mitigate the effects of pollution. What this system of thinking did not account for was climate change.

Today, much of our climate legislation is based on data and models. We have all heard about the 1.5C warning, the Paris Agreement, and the regularly tossed-about figure that the world’s top 50 most polluting corporations contribute more emissions combined than the rest of the world. We’re still in love with the safety and surety of numbers, even if they’re telling us the end is nigh.

However, the challenge of bridging the gap between climate data, modelled scenarios, and effective policy – particularly given our incomplete understanding of Earth’s climate systems – has led some scientists to sound the alarm.

Although scientists are able to forecast the broad trajectories of climate change relatively accurately, the climate is also changing in ways the models cannot predict. The record-breaking temperatures of 2023 came out of the blue, reaching far above the forecast uncertainty range. 2024 has broken some of those records yet again. The second half of the twentieth century may have lulled us into a false sense of security with regard to the accuracy of our models – between 1977 and 2003, for example, ExxonMobil scientists generated strikingly accurate climate forecasts which were never released publicly – but the past decade seems to have seen a slow creep in the rate of unforecasted events.

Most of our up-to-date climate models regularly present a level of uncertainty incompatible with what’s demanded by policymaking. This is especially clear when considering the risk of collapse of some of Earth’s unpredictable systems, such as the Atlantic Meridional Overturning Circulation (AMOC), the system of currents in the Atlantic Ocean which keeps Europe from becoming an uninhabitable frozen tundra. Other examples of climate tipping points include the melting of the Greenland ice sheet, Amazon deforestation, and coral reef death. Quantifying the exact level of greenhouse-gas emissions required to push these systems to their breaking points is an impossible task at present. Yet each tipping point may set off a phase shift: an unpredictable chain reaction in Earth’s climate.

Policymakers do not like such black boxes. When crafting a policy addressing climate change, they rely on models like the Social Cost of Carbon (SCC), which measures the economic damages of each additional ton of CO2 released into the atmosphere. The resulting calculation can then be used to determine the value of any policy measure taken – a cost-benefit analysis of things like building infrastructure, setting carbon taxes, or reforestation initiatives.

When a politician argues that a proposed environmental regulation would excessively harm the economy, they usually base their claim on estimations from models like the SCC. A central input to these models is the ‘discount rate’, a predetermined value used by economists and policymakers to estimate the present-day cost of future climate damages. The lower the discount rate a country adopts, the more expensive it becomes for that country to avoid addressing climate change, and the less it discounts the interests of future generations in favour of those in the present.
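To make the effect of the discount rate concrete, here is a minimal sketch of the standard present-value calculation, in which a future damage is divided by (1 + rate) raised to the number of years. The function name and all figures are hypothetical and chosen purely for illustration; real models such as the SCC are far more elaborate than this.

```python
def present_value(future_damage: float, discount_rate: float, years: int) -> float:
    """Discount a damage incurred `years` from now back to today's money.

    Uses simple annual compounding: PV = damage / (1 + rate) ** years.
    """
    return future_damage / (1.0 + discount_rate) ** years


# Hypothetical inputs (assumptions, not figures from any real model):
# one trillion in climate damages, incurred 100 years from now.
damage = 1_000_000_000_000
horizon_years = 100

for rate in (0.01, 0.03, 0.05):
    pv = present_value(damage, rate, horizon_years)
    print(f"discount rate {rate:.0%}: present value = {pv / 1e9:,.1f} billion")
```

Under these assumed inputs, the same future damage is worth roughly 370 billion today at a 1% discount rate, but less than 8 billion at 5%. The choice of rate alone changes how much present-day spending the calculation can justify by a factor of almost fifty.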

Around the world, economists use discount rates and climate models to determine the future value of decisions made now. This means that calculating the exact value of a discount rate becomes, to some extent, an ideological position – valuing short-term economic growth versus the uncertainty of long-term environmental impact.

In the 1960s, Robert Solow supervised the PhD of a young economist named William Nordhaus, who now holds the Nobel Memorial Prize in Economic Sciences for his climate-modelling work. Nordhaus’ career has seen him placed in one of the most influential positions possible in global climate policy. He and his models have the ear of the IPCC (the Intergovernmental Panel on Climate Change), the US government, think tanks, institutions, economists and financiers the world over.

Nordhaus’ primary climate model is called DICE (Dynamic Integrated Climate-Economy) and calculates climate impact through its effect on GDP. DICE owes its success to being one of the first, and one of the few, models to provide policy-relevant insights into global environmental change and sustainable development issues. It does so by providing a quantitative description of key processes in the human and Earth systems and their interactions.

Despite its influence, the DICE model has prompted criticism from several of Nordhaus’ contemporaries. One point of critique is that by not accounting for the deep uncertainty of climate tipping points, DICE’s determination of future value is based on data that is fundamentally unable to account for some of the biggest climate risks we face.

The Solow system of thought – that is, that natural resources are a factor interchangeable with labour, productivity and technological forces – underpins the Nordhaus models which inform global climate policy today. This results in some curious language in his published papers – one example being that in a scenario of 6C global warming, we should expect ‘damages’ of between 8.5 and 12.5% to world GDP over the course of the 21st century. Nicholas Stern, of the Grantham Research Institute on Climate Change and the Environment at the London School of Economics and Political Science, responded to this claim in the Economic Journal by emphasising the actual human horror beyond mathematical value: Nordhaus’ model does not take into account that at 6C of warming, there would be ‘deaths on a huge scale, migration of billions of people, and severe conflicts around the world’.

Stern is one of a growing number of Nordhaus’ contemporaries who see the combination of a discount-rate system (based on neoclassical ‘infinite growth’ modelling, and therefore resulting in high discount rates) and the growing complexity of climate modelling as steering us towards planetary destruction. To some extent, they see the situation as comparable to the faith in the system that resulted in the 2008 global financial disaster: another house of cards built on economic modelling that assumed infinite growth.

Joseph E. Stiglitz, a Nobel Laureate and former chief economist at the World Bank, is another vocal opponent of the Nordhaus approach. In 2023, along with Stern, he told The Intercept that Nordhaus “doesn’t appropriately take into account either extreme risk or deep uncertainty” and branded his projections “wildly wrong”. He has repeatedly condemned Nordhaus’ assertion that in theory, allowing warming of up to 3.5C is economically optimal for GDP – despite this temperature threshold being widely considered in scientific literature to result in mass-scale societal disruption and climate tragedy.

If this unnerves you, it should. From a political perspective, we are flying blind into an increasingly uncertain climate future which current policymaking instruments cannot accommodate. Global governance has put its trust into a shallow understanding of climate economics to ensure we are doing the right thing, because surely, we are putting so much effort into our data collection and modelling that the numbers can’t lie.

The allure of numbers and data is undeniable; they offer a sense of control and predictability in an otherwise chaotic world. However, the reality of climate systems – and the damage caused in the event of their breakdown – is far more complex than these models capture. While economists debate through published papers, the general public – and future generations who will bear the consequences – are largely excluded from the conversation.

In a better, possible world, acknowledging the deep uncertainty inherent in climate dynamics would translate into policies that are robust enough to withstand a range of possible outcomes, including those we cannot foresee. In a governance context, this is known as the ‘Precautionary Principle’, or the application of foresight to governance. The UN’s 1992 Rio Declaration on Environment and Development states that “where there are threats of serious or irreversible damage, lack of full scientific certainty shall not be used as a reason for postponing cost-effective measures to prevent environmental degradation.” The Precautionary Principle is a fundamental rejection of the notion that uncertainty should result in inaction.

When the stakes are existential and the events in question are both high-risk and highly uncertain, humans will have to reconsider their beloved relationship to data. The future of our planet cannot depend on the comfort we find in numbers and models based on endless increases in GDP. It must depend instead on our willingness to confront the unpredictability and risk tied to our collective existence, with policies that prioritise long-term resilience and a true understanding of how complex the climate is.
